Building a Storj Node on CentOS

Be the decentralized cloud. Contribute your own storage node. https://www.storj.io/node

Storj is an S3-compatible platform and suite of decentralized applications that lets you store data securely across a decentralized network. The more bandwidth and storage you make available, the more you get paid.

The Linux install uses a Docker container. I, like others, could not get the node working by simply replacing Docker with Podman. For my first successful attempt I went with CentOS and Docker, with a view to moving to Podman and finally to RHEL 8 with Podman. This guide documents that first successful attempt.

References:

- Host a node: https://docs.storj.io/node/

- ERC-20 compatible wallet: https://app.mycrypto.com/download-desktop-app

- Migrate to a new host: https://docs.storj.io/node/resources/faq/migrate-my-node

Requirements

https://docs.storj.io/node/before-you-begin/prerequisites

- Storage:

  - / 8GB

  - /home 552GB: 500GB + 10% overhead for Storj, plus user files

- CPU: 1

- RAM: 2GB

- OS: CentOS 8 Server - minimal install
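The /home figure above is the space offered to the network plus 10% overhead. A minimal sketch of that arithmetic in shell follows; the function name `required_gb` is hypothetical, and the 500GB allocation and 10% overhead are just the values used in this guide. It gives 550GB for Storj, so the rest of the 552GB figure is presumably the allowance for user files.

```shell
# Hypothetical helper: disk space (in GB) to reserve for a node that
# offers $1 GB to the network with $2 percent overhead on top.
required_gb() {
    alloc_gb=$1
    overhead_pct=$2
    # Integer arithmetic, rounded up so the result is never too small.
    echo $(( (alloc_gb * (100 + overhead_pct) + 99) / 100 ))
}

required_gb 500 10   # prints 550
```

Rounding up is a deliberate choice here: for a disk reservation, slightly too much is safer than slightly too little.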

Procedure

I recommend a different setup order to make the process more linear: skip creating an “Identity” until after Docker is installed and the unprivileged user (e.g. storj) has been created.

Set Up All the Things Outside the Storj Node

Get set up with an identity at Storj: https://www.storj.io/host-a-node

The fourth step there is installing the “CLI”, which walks you through the prerequisites. Remember that, depending on your firewall, you might need to define both:

- NAT / Port Forward and

- Firewall rule to allow the port forward, doh!
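Once the node is running, it can help to confirm that both pieces are in place by testing the port from a machine outside your network. The sketch below is one way to do that for TCP only; the function name `port_open` and the host name in the example are placeholders, and it assumes `bash` and `timeout` are available on the machine you test from. A refused or timed-out connection can also simply mean the node is not listening yet.

```shell
# Hypothetical helper: succeeds if a TCP connection to host $1, port $2
# can be opened within 5 seconds. Relies on bash's /dev/tcp pseudo-device.
port_open() {
    timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Example (placeholder host name), run from outside your network:
# port_open node.example.com 28967 && echo "reachable" || echo "blocked"
```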

Docker Installation

Pick your Linux distro: https://docs.storj.io/node/setup/cli/docker

Or go straight to the Docker CentOS instructions: https://docs.docker.com/engine/install/centos/

yum update

reboot

yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

yum install -y yum-utils

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum install docker-ce docker-ce-cli containerd.io

systemctl start docker

docker run hello-world

groupadd docker

systemctl enable docker.service

systemctl enable containerd.service

cat > /etc/docker/daemon.json <<EOT

{

"log-driver": "local",

"log-opts": {

"max-size": "10m"

}

}

EOT

systemctl restart docker
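A malformed daemon.json stops the Docker daemon from starting, so it can be worth checking that the file parses as JSON whenever it is edited. The sketch below is one way to do that; the function name `json_ok` is hypothetical, and it assumes a Python 3 interpreter is present (on a minimal CentOS 8 install the system one lives at /usr/libexec/platform-python).

```shell
# Hypothetical helper: succeeds if file $1 parses as JSON.
# Tries python3 first, then the CentOS 8 system interpreter.
json_ok() {
    for py in python3 /usr/libexec/platform-python; do
        if command -v "$py" >/dev/null 2>&1; then
            "$py" -m json.tool "$1" >/dev/null 2>&1
            return $?
        fi
    done
    echo "no Python 3 interpreter found" >&2
    return 2
}

# Example:
# json_ok /etc/docker/daemon.json && echo "daemon.json looks valid"
```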

firewall-cmd --add-port 28967/udp --add-port 28967/tcp

firewall-cmd --add-port 28967/udp --add-port 28967/tcp --permanent

sysctl -w net.core.rmem_max=2500000

echo "net.core.rmem_max=2500000" >> /etc/sysctl.conf
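Appending with `>>` adds a duplicate line every time the command is re-run. If you expect to repeat these steps, an idempotent append is a small improvement. This is only a sketch, and the function name `append_once` is hypothetical.

```shell
# Hypothetical helper: append line $2 to file $1 unless an identical
# line is already present (grep -x matches whole lines, -F is literal).
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example (as root):
# append_once /etc/sysctl.conf "net.core.rmem_max=2500000"
# sysctl -n net.core.rmem_max   # shows the value currently in effect
```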

Create an Unprivileged Account for the Node Software

useradd -m -G docker storj

su - storj

# Check Docker works.

docker run hello-world

Create a Storj Identity

https://docs.storj.io/node/dependencies/identity
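After the identity has been created and authorized, the linked page describes a sanity check that counts the certificate blocks in the identity files. A rough sketch of that check is below; the function name `pem_blocks` is hypothetical, and the expected counts in the comments (2 and 3) are my recollection of the Storj documentation, so confirm them against the page above.

```shell
# Hypothetical helper: count the PEM blocks ("BEGIN" lines) in file $1.
pem_blocks() {
    grep -c BEGIN "$1"
}

# Example, run as the storj user:
# id_dir=/home/storj/.local/share/storj/identity/storagenode
# pem_blocks "$id_dir/ca.cert"         # 2 expected (to be confirmed)
# pem_blocks "$id_dir/identity.cert"   # 3 expected (to be confirmed)
```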

Install Storj Node Software

docker pull storjlabs/storagenode:latest

mkdir /home/storj/storj_node_disk

docker run --rm -e SETUP="true" \

--mount type=bind,source="/home/storj/.local/share/storj/identity/storagenode",destination=/app/identity \

--mount type=bind,source="/home/storj/storj_node_disk",destination=/app/config \

--name storagenode storjlabs/storagenode:latest

First Time Start

docker run -d --restart unless-stopped --stop-timeout 300 \

-p 28967:28967/tcp -p 28967:28967/udp -p 127.0.0.1:14002:14002 \

-e WALLET="0x0000000000000000000000000000000000000000" \

-e EMAIL="your@email.com" \

-e ADDRESS="<Internet_fqdn>:28967" \

-e STORAGE="500GB" \

--mount type=bind,source="/home/storj/.local/share/storj/identity/storagenode",destination=/app/identity \

--mount type=bind,source="/home/storj/storj_node_disk",destination=/app/config \

--name storagenode storjlabs/storagenode:latest
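A typo in the WALLET value is easy to miss, so a quick format check before starting the node may be worthwhile. The sketch below only tests the shape of an Ethereum-style address ("0x" followed by 40 hexadecimal characters); the function name `valid_wallet` is hypothetical, and passing the check does not prove the address is yours. The all-zero placeholder above passes it too.

```shell
# Hypothetical helper: succeeds if $1 looks like an Ethereum-style
# address, i.e. "0x" followed by exactly 40 hexadecimal characters.
valid_wallet() {
    printf '%s\n' "$1" | grep -Eq '^0x[0-9a-fA-F]{40}$'
}

# Example:
# valid_wallet "$WALLET" || echo "WALLET does not look like an address"
```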

Common Commands

docker logs storagenode

docker stop -t 300 storagenode

docker start storagenode

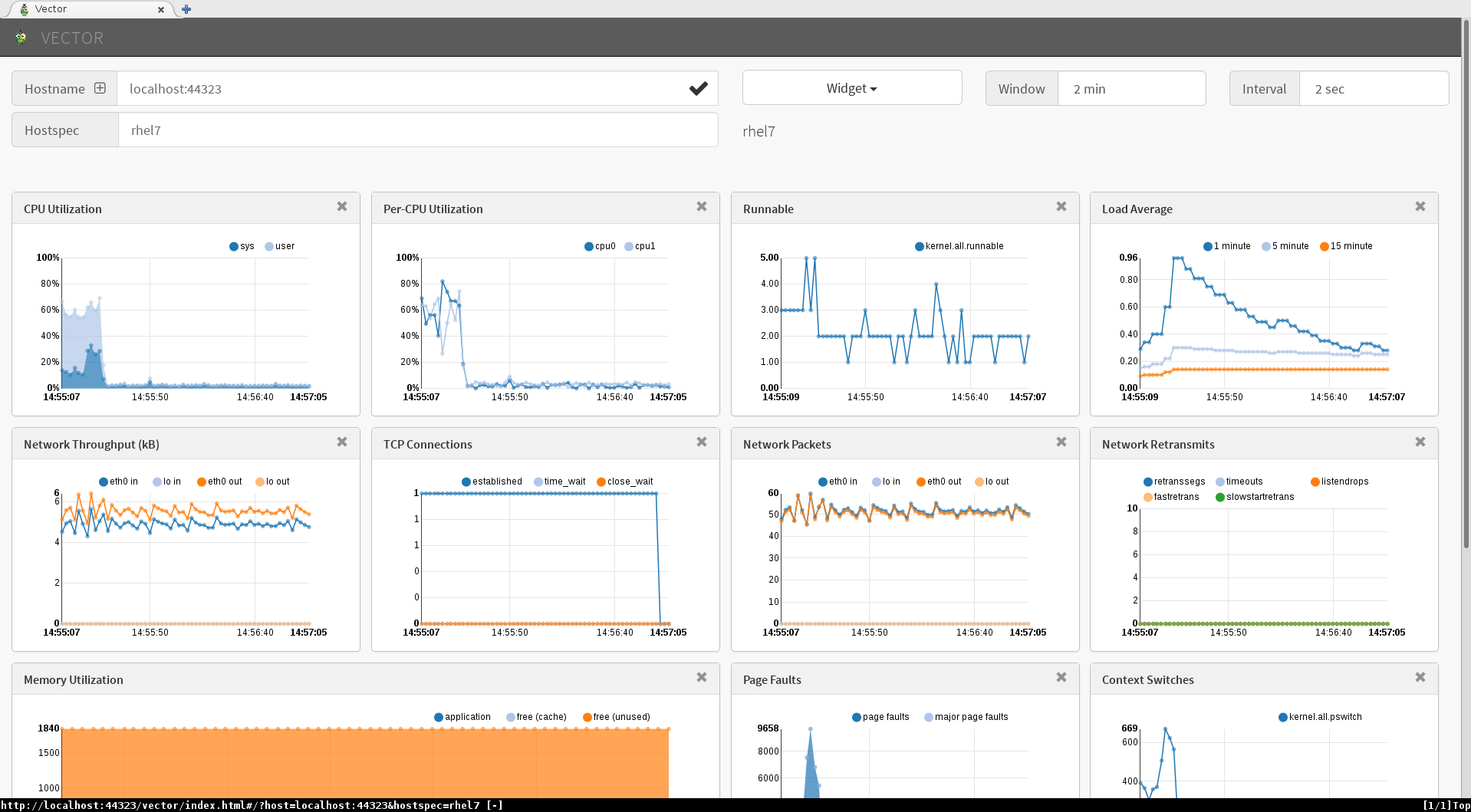

Dashboards

CLI

docker exec -it storagenode /app/dashboard.sh

Web

The docker run command that started the Storj software publishes the web dashboard on 127.0.0.1 only, so it is not reachable from other machines. For the paranoid, establish an SSH tunnel that forwards port 14002 to the Storj host. This will probably mean enabling SSH port forwarding on the host and restarting SSH.

ssh -L 127.0.0.1:14002:127.0.0.1:14002 root@<storj_host>

Then browse to http://127.0.0.1:14002/
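If the page does not load, it helps to know whether anything answers on the forwarded port at all. A minimal sketch, assuming `curl` is installed on the machine where the tunnel is open; the function name `dashboard_up` is hypothetical.

```shell
# Hypothetical helper: succeeds if an HTTP server answers at URL $1
# (default: the tunnelled dashboard address) within 5 seconds.
dashboard_up() {
    curl -fsS -o /dev/null --max-time 5 "${1:-http://127.0.0.1:14002/}" 2>/dev/null
}

# Example, with the SSH tunnel open in another terminal:
# dashboard_up && echo "dashboard reachable" || echo "no answer on 14002"
```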
